Apache Airflow Training Course in Gurgaon

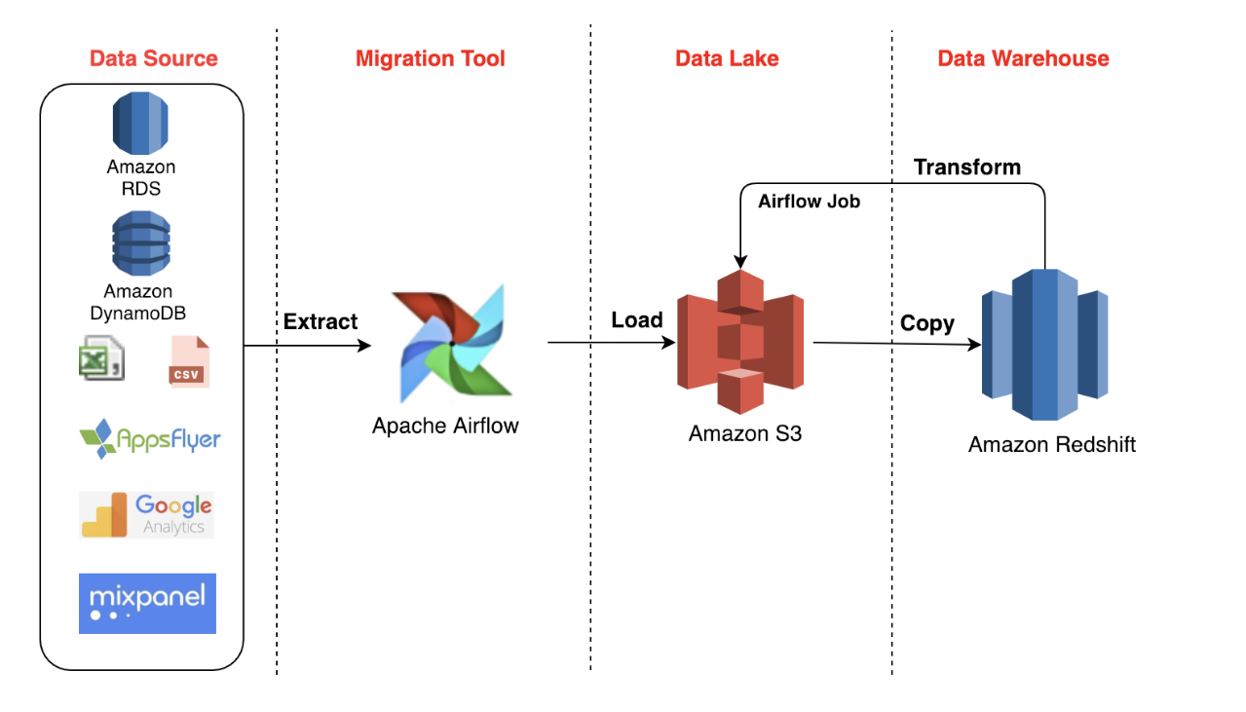

Learn to orchestrate ETL/ELT pipelines with Apache Airflow, which schedules and monitors data pipelines defined as Directed Acyclic Graphs (DAGs). Apache Airflow helps data engineers schedule and monitor ETL workflows, manage ETL job dependencies within the pipeline, and ingest data from various data sources using the pre-built connectors available. Airflow pipelines scale out using the Celery executor, and Airflow's pipeline monitoring tools help handle pipeline failures effectively.

- Develop and orchestrate ETL pipelines using DAG templates in Airflow to speed up data pipeline automation and deployment.

- The training program provides interactive sessions with industry professionals

- Real-time project experience to crack job interviews

- Course Duration - 3 months

- Get training from Industry Professionals

Train with real-time course materials on online portals, backed by trainer experience, for a personalized teaching experience.

Active interaction in sessions guided by leading professionals from the industry

Gain professional insights from leading industry experts across domains

24/7 Q&A support designed to address training needs

Apache Airflow Training Course Overview

Shape your career in orchestrating ETL/ELT pipelines with Apache Airflow, which schedules and monitors data pipelines defined as Directed Acyclic Graphs (DAGs). Apache Airflow helps data engineers schedule and monitor ETL workflows, manage ETL job dependencies within the pipeline, and ingest data from various data sources using the pre-built connectors available. Airflow pipelines scale out using the Celery executor, and Airflow's pipeline monitoring tools help handle pipeline failures effectively. This training shows how to build and automate ETL/data pipelines using Airflow on hybrid cloud platforms (AWS, Azure, GCP & others) and integrate them with ETL (Glue/PySpark) jobs to speed up, scale & automate infrastructure provisioning.

- Benefit from ongoing access to all self-paced videos and archived session recordings

- Success Aimers supports you in gaining visibility among leading employers

- Industry-paced training with real-time scenarios using Airflow tools (Amazon Managed Workflows for Apache Airflow (MWAA), Airflow DAGs, Cloud Composer & others) for infrastructure automation.

- Real-world industry scenarios with project implementation support

- Live virtual classes led by top industry experts, along with project implementation

- Q&A support sessions

- Job Interview preparation & use cases

What do Airflow Engineers do?

Airflow Engineers automate and orchestrate ETL pipelines using Directed Acyclic Graphs (DAGs). Airflow also helps data engineers schedule & monitor ETL workflows & manage ETL job dependencies using a pipeline DAG as the orchestrator. It also helps ingest data from various data sources using the pre-built connectors available.

Role of an Airflow Engineer?

Airflow Engineers automate and orchestrate ETL pipelines using Directed Acyclic Graph (DAG) templates.

Responsibilities include:

- Airflow Engineers use Visual Studio & other IDEs to write DAG scripts that automate ETL pipelines.

- Airflow Engineers manage the end-to-end data orchestration life cycle using DAG workflows and Airflow templates.

- Design and develop Airflow workflows that automate ETL (Glue/PySpark/Databricks) job pipelines securely & seamlessly.

- Success Aimers helps aspiring Airflow professionals build, deploy and manage data pipelines using DAG templates effectively & seamlessly.

- Deploy Airflow scripts within cloud infrastructure securely & seamlessly.

Who should opt for the Airflow Engineer course?

The Airflow course accelerates careers in data & cloud organizations.

- Airflow Engineers – Airflow Engineers manage the end-to-end data orchestration life cycle using DAG workflows and Airflow sensors.

- Airflow Engineers – Implementing ETL Pipelines using Airflow Tools.

- Airflow Developers – Automating ETL pipeline deployment workflows using Airflow Tools.

- ETL/Data Architect – Leading data initiatives within the enterprise.

- Data and AI Engineers – Deploying ETL Application using DevOps automation tools including Airflow to orchestrate pipelines seamlessly and effectively.

Prerequisites of Airflow Engineer Course?

Prerequisites required for the Airflow Engineer Certification Course

- High School Diploma or an undergraduate degree

- Python scripting, plus familiarity with JSON/YAML

- IT foundational knowledge along with data and cloud ETL skills

- Knowledge of cloud computing platforms like AWS, Azure and GCP will be an added advantage.

Kind of Job Placement/Offers after Airflow Engineer Certification Course?

Job Career Path in ETL/Data Pipeline Orchestration using Airflow

- Data Engineer – Develop & deploy ETL scripts within cloud infrastructure using DevOps tools & orchestrate them using Airflow & similar tools.

- Airflow Automation Engineer – Design, develop and build automated ETL workflows to drive key business processes/decisions.

- Data Architect – Leading data initiatives within the enterprise.

- Data Engineers – Implementing ETL Pipelines using PySpark & Airflow Tools.

- Cloud and Data Engineers – Deploying ETL Application using DevOps automation tools including Terraform across environments seamlessly and effectively.

| Training Options | Weekdays (Mon-Fri) | Weekends (Sat-Sun) | Fast Track |
|---|---|---|---|
| Duration of Course | 2 months | 3 months | 15 days |
| Hours / day | 1-2 hours | 2-3 hours | 5 hours |
| Mode of Training | Offline / Online | Offline / Online | Offline / Online |

Apache Airflow Course Curriculum

Start your career in data with the Airflow Engineer certification course. It shapes your career around current industry needs for ETL automation and scheduling using workflow tools like Airflow, Control-M, Apache Oozie & others, which help organizations improve decision making and drive business growth with improved customer satisfaction.

Apache Airflow

Airflow Fundamentals

- Introduction to Airflow and its Architecture

- What is Airflow?

- How does Airflow work?

- Installing Airflow 2.0

- Quick Tour of Airflow UI

- Executor

- Workflow

- Task & Task Instances

- Setting Up Airflow Environment and Basics

Important Views of the Airflow UI

- DAGs View

- Run your First DAG

- Grid View

- Graph View

- Gantt View

- Code View

Coding our First Data Pipeline with Airflow

What is a DAG?

DAG Skeleton

What is an Operator?

- Fundamentals of the Databricks Lakehouse Platform

- Persistent Volume Claim (PVC)

- Fundamentals of Delta Lake

- Fundamentals of Lakehouse Architecture

- Fundamentals of Databricks SQL

- Fundamentals of Databricks Machine Learning

- Fundamentals of the Databricks Lakehouse Platform Accreditation

- Fundamentals of Big Data

- Fundamentals of Cloud Computing

- Fundamentals of Enterprise Data Management Systems

- Fundamentals of Machine Learning

- Fundamentals of Structured Streaming

Types of Operators

- Action Operators

- Transfer Operators

- Sensor Operators

- BashOperator

- PythonOperator

- EmailOperator

- MySqlOperator, SqliteOperator, PostgresOperator

Providers

Creating a Connection

What is a Sensor?

What is a Hook?

DAG Scheduling

Backfilling: How does it work?

Forex Data Pipeline

- Project: Forex Data Pipeline

- What is a DAG?

- Define our DAG

- What is an Operator?

- Check if the API is available - HttpSensor

- Check if the currency file is available - FileSensor

- Download the forex rates from the API – PythonOperator

- Save the Forex Rates into HDFS - BashOperator

- Create the Hive Table – forex_rates – HiveOperator

- Process the forex rates with Spark – SparkSubmitOperator

- Send Email notifications - EmailOperator

- Send Slack Notifications – SlackWebhookOperator

- Add dependencies between tasks

- The Forex Data Pipeline in action
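
As a rough illustration of the "Add dependencies between tasks" step, the sketch below models the Forex pipeline steps as a dependency graph and derives a valid run order. It is plain Python built on the standard-library `graphlib` module, not Airflow code. The task ids and the strictly linear ordering are assumptions made for illustration; the course project may wire the tasks differently.

```python
from graphlib import TopologicalSorter

# Hypothetical task ids, one per step in the project list above.
FOREX_TASKS = [
    "is_forex_api_available",      # HttpSensor
    "is_currency_file_available",  # FileSensor
    "download_rates",              # PythonOperator
    "save_rates_to_hdfs",          # BashOperator
    "create_forex_rates_table",    # HiveOperator
    "process_rates_with_spark",    # SparkSubmitOperator
    "send_email_notification",     # EmailOperator
    "send_slack_notification",     # SlackWebhookOperator
]


def linear_dependencies(tasks):
    """Map each task to the set of tasks it waits on (here: its predecessor)."""
    deps = {tasks[0]: set()}
    for upstream, downstream in zip(tasks, tasks[1:]):
        deps[downstream] = {upstream}
    return deps


def execution_order(deps):
    """Return one valid run order; graphlib raises CycleError if deps has a cycle."""
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    for task_id in execution_order(linear_dependencies(FOREX_TASKS)):
        print(task_id)
```

A cycle in the dependency map makes `execution_order` fail, which mirrors why a pipeline has to be acyclic before it can be scheduled.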

Distributing Apache Airflow

- What is an executor?

- Default Config

- Sequential Executor with SQLite

- Local Executor with PostgreSQL

- Executing tasks in parallel with the Local Executor

- Ad hoc queries with the metadata database

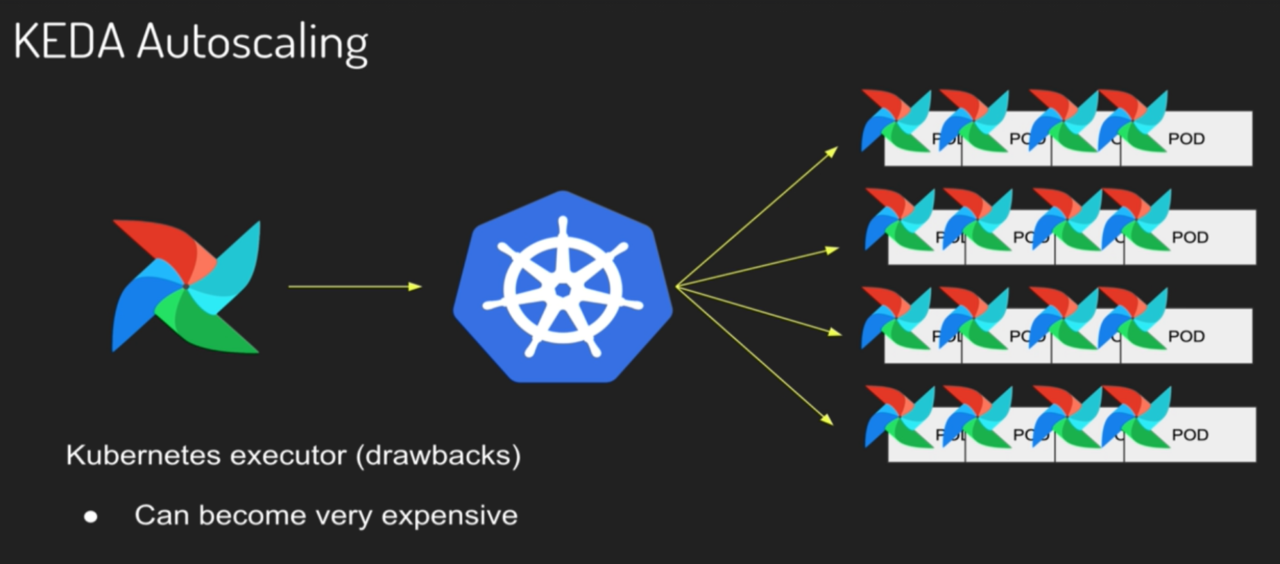

- Scale out Apache Airflow with Celery Executors and Redis

- Distributing our tasks with the Celery Executor

Implementing Advanced Concepts in Airflow

- How to use SubDAGs

- Add the DAG xcom_dag.py

- Trigger Rules or how tasks get triggered

Mastering our DAGs

- Introduction

- start_date and schedule_interval parameters demystified

- Backfill and Catchup

- How to make our tasks dependent

- Dealing with Timezones in Airflow

- Creating Task dependencies between DAG Runs

- How to structure our DAG folder

- How to deal with failures in our DAGs

- Retry and Alerting

- How to test our DAGs
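
To make the "Backfill and Catchup" topic more concrete, here is a minimal plain-Python sketch of the idea: a run covers one schedule interval and is created only after that interval has ended. With catchup enabled every elapsed interval since `start_date` gets a run, and with it disabled only the most recent one does. The function names are hypothetical and this is a simplified model, not Airflow's scheduler logic.

```python
from datetime import datetime, timedelta


def completed_intervals(start_date, interval, now):
    """List every (start, end) schedule interval that has fully elapsed by `now`."""
    intervals = []
    start = start_date
    while start + interval <= now:
        intervals.append((start, start + interval))
        start += interval
    return intervals


def runs_to_create(start_date, interval, now, catchup=True):
    """With catchup, return all elapsed intervals; otherwise only the latest one."""
    intervals = completed_intervals(start_date, interval, now)
    return intervals if catchup else intervals[-1:]


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    now = datetime(2024, 1, 4, 12, 0)
    for begin, end in runs_to_create(start, timedelta(days=1), now):
        print(begin.date(), "->", end.date())
```

In this model, a daily schedule started on 1 January and inspected at midday on 4 January has three completed intervals. The interval that began on 4 January is still open and gets no run yet.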

Improving our DAGs with Advanced Concepts

- Introduction

- Minimizing Repetitive Patterns with SubDAGs

- Making different paths in our DAGs with Branching

- Trigger Rules for our Tasks

- Avoid hard coding rules with Variables, Macros and Templates

- Templating our tasks

- How to share data between our tasks with XComs

- TriggerDagRunOperator or when our DAG controls another DAG

- Dependencies between our DAGs with the ExternalTaskSensor

- Lab – Column Level Dynamic Data Masking

- What is Row Level Security?

- Lab – Create and Implement Row Level Access Policy

- Dynamic Task Generation

- Task Groups and Dependencies

- Deferrable Operators

- XCom
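
The "Trigger Rules for our Tasks" topic can be previewed with a small sketch that decides whether a task should run from the final states of its upstream tasks. The rule names match Airflow's trigger rule names, but the function is a hypothetical, simplified model. It assumes every upstream task has already finished and only distinguishes the states `success`, `failed` and `skipped`.

```python
def should_run(trigger_rule, upstream_states):
    """Decide whether a task runs, given the final states of its upstream tasks.

    Simplified model: all upstream tasks are assumed to have finished.
    """
    rules = {
        "all_success": lambda states: all(s == "success" for s in states),
        "all_failed": lambda states: all(s == "failed" for s in states),
        "all_done": lambda states: True,  # every upstream finished, in any state
        "one_success": lambda states: any(s == "success" for s in states),
        "one_failed": lambda states: any(s == "failed" for s in states),
        "none_failed": lambda states: all(s != "failed" for s in states),
    }
    if trigger_rule not in rules:
        raise ValueError(f"unknown trigger rule: {trigger_rule}")
    return rules[trigger_rule](upstream_states)


if __name__ == "__main__":
    states = ["success", "failed"]
    for rule in ("all_success", "one_failed", "all_done"):
        print(rule, should_run(rule, states))
```

A typical use is an alerting task with `one_failed`, which runs only when something upstream has gone wrong.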

Performance Optimization

- Managing Task Pools for Resources

- Memory and Compute Intense Jobs

- Scaling for 1000+ DAGs

Advanced Configuration and Troubleshooting

- DAG Processor and Scheduler Service

- Configuring DAG Parse Time

- Custom XCom Backends

DAG Concurrency and Parallelism

- Managing DAG/Task Concurrency Levels

- Parallelism in Workers

- Task Count Decisions

Troubleshooting and Debugging

- Debugging Airflow and GKE Logs

- Managed Composer (GCP) Mechanics

- Issue Identification

Practical Case Studies and Best Practices

- Real-world Use Cases

- Hands-on Workshop with Complex DAGs

- Scaling Strategies

Additional Topics

- Executors and Sensors

- Monitoring and Alerts Integration

- Extending Airflow with Custom Operators

Project 1

Develop a data pipeline using Azure Databricks to ingest data from hybrid sources (API, HTTP interfaces, databases & others) into DWs & Delta Lake & orchestrate/schedule it using Airflow.

Project Description: Data is ingested from various sources into the raw layer (bronze layer) using Azure Data Factory (ADF) connectors. It is then standardized & cleansed into the silver layer. From the curated layer (silver layer), Databricks jobs apply business transformations and populate the target layer (gold layer), from which key business insights are derived. The whole pipeline is automated using the ETL orchestration tool Airflow.
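
The layered flow in the project description can be sketched in a few lines of plain Python. This is only an illustration of the bronze, silver and gold idea: the record fields (`region`, `amount`), the cleansing rules and the per-region total are all hypothetical, and the project itself uses ADF, Databricks and Airflow rather than in-memory lists.

```python
from collections import defaultdict


def to_silver(bronze_rows):
    """Standardize and cleanse raw rows: trim and upper-case region, parse amount."""
    silver = []
    for row in bronze_rows:
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue  # discard rows whose amount is missing or unparseable
        region = str(row.get("region", "")).strip().upper()
        silver.append({"region": region, "amount": amount})
    return silver


def to_gold(silver_rows):
    """Apply a business transformation: total amount per region."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


if __name__ == "__main__":
    bronze = [
        {"region": " north ", "amount": "10.5"},
        {"region": "North", "amount": "4.5"},
        {"region": "south", "amount": None},
        {"region": "south", "amount": "3"},
    ]
    print(to_gold(to_silver(bronze)))
```

In an orchestrated pipeline each layer would typically be its own task, so that a failure in cleansing stops the gold layer from being refreshed with bad data.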

Project 2

Automated Ingestion Pipeline using Apache Airflow DAGs

The data ingestion pipeline is automated through Apache Airflow, using Airflow connectors & sensors that extract data from AWS S3. PySpark jobs, called from within the orchestration pipeline through Airflow's Spark connectors, standardize & cleanse the data, which is finally stored in Apache Iceberg tables on AWS. Data is extracted from sources such as contact centers & others. The whole pipeline is a real-time pipeline: Airflow connectors detect when data arrives from the source and trigger the deployment process.
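
The "triggers whenever data arrives" behaviour is what a sensor provides: it checks a condition repeatedly until the condition holds or a timeout expires. Below is a minimal plain-Python sketch of that polling loop. The parameter names `poke_interval` and `timeout` echo the ones Airflow sensors use, but `wait_for` itself is a hypothetical helper, not an Airflow API.

```python
import time


def wait_for(condition, poke_interval=1.0, timeout=10.0,
             sleep=time.sleep, clock=time.monotonic):
    """Poll condition() until it returns True.

    Returns True as soon as the condition holds, or False if `timeout`
    seconds pass first. `sleep` and `clock` are injectable for testing.
    """
    deadline = clock() + timeout
    while True:
        if condition():
            return True
        if clock() >= deadline:
            return False
        sleep(poke_interval)


if __name__ == "__main__":
    # Simulated arrivals: the data shows up on the third check.
    checks = iter([False, False, True])
    arrived = wait_for(lambda: next(checks), poke_interval=0.1, timeout=5.0)
    print("data arrived:", arrived)
```

In the project, the condition would be something like "a new object exists under the S3 prefix", and the downstream PySpark task would start only once the wait returns True.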

After completing this training program you will be able to launch your career in the world of Airflow, certified as an Apache Certified Airflow Professional.

With the Airflow certification in hand, you can promote your profile on LinkedIn, Meta, Twitter & other platforms to boost your visibility.

- Get your certificate upon successful completion of the course.

- Certificates for each course

- Airflow

- Databricks

- CI/CD

- ETL Orchestration

- PySpark

- Data Pipelines

- Managed Apache Airflow

- Azure Container Registry

- Airflow DAGs

- Airflow Sensors

- Celery Executor

- Kubernetes

- Pipeline Orchestration

- Docker

Designed to provide guidance on current interview practices, personality development, soft skills enhancement, and HR-related questions

Receive expert assistance from our placement team to craft your resume and optimize your Job Profile. Learn effective strategies to capture the attention of HR professionals and maximize your chances of getting shortlisted.

Engage in mock interview sessions led by our industry experts to receive continuous, detailed feedback along with a customized improvement plan. Our dedicated support will help refine your skills until you land your desired job in the industry.

Join interactive sessions with industry professionals to understand the key skills companies seek. Practice solving interview question worksheets designed to improve your readiness and boost your chances of success in interviews

Build meaningful relationships with key decision-makers and open doors to exciting job prospects with product- and service-based partners

Your path to job placement starts immediately after you finish the course with guaranteed interview calls

Why should you choose to pursue an Airflow course with Success Aimers?

Success Aimers' teaching methodology is built around real-time job scenarios that cover industry use cases, which helps you build a career in ETL/data pipeline orchestration (Airflow). Training is delivered by leading industry experts, helping students answer interview questions confidently and excel at projects in real-world work.

What is the time frame to become competent as an Airflow engineer?

Becoming a successful Airflow Engineer typically requires 1-2 years of consistent learning, with a dedicated 3-4 hours on a daily basis.

With Success Aimers, the help of leading industry experts & specialized trainers lets you reach that level of mastery in about six months to a year, because our curriculum & labs are built around hands-on projects.

Will skipping a session prevent me from completing the course?

Missing a live session doesn’t affect your training, because every live session is recorded and students can refer to the recording later.

What industries lead in Airflow implementation?

Manufacturing

Financial Services

Healthcare

E-commerce

Telecommunications

BFSI (Banking, Finance & Insurance)

Travel Industry

Does Success Aimers offer corporate training solutions?

At Success Aimers, we have tied up with 500+ corporate partners to support their talent development through online training. Our corporate training programme delivers training based on industry use cases and focused on the ever-evolving tech space.

How is the Success Aimers Airflow Certification Course reviewed by learners?

Our Airflow Engineer course features a well-designed curriculum framework focused on delivering training based on industry needs & aligned with the ever-changing needs of today’s workforce driven by Data & AI.

Our training curriculum has also been reviewed by alumni, who praise the thorough content & the real, practical use cases covered during the training. Our program helps working professionals upgrade their skills & grow further in their roles.

Can I attend a demo session before I enroll?

Yes, we offer a one-to-one discussion before the training and also schedule a demo session, so you can get a feel for the trainer's teaching style and ask questions about the training programme, placements & job growth after completing the training.

What batch size do you consider for the course?

On average we keep 5-10 students in a batch to keep sessions interactive, so the trainer can focus on each individual instead of a large group.

Do you offer learning content as part of the program?

Students are provided with training content: the trainer shares code snippets & PPT materials, along with recordings of all the batches.
